This demonstrates in a really layman-understandable way some of the shortcomings of LLMs as a whole, I think.

An LLM never lies because it cannot lie.

Corollary: An LLM never tells the truth because it cannot tell the truth.

Final theorem: An LLM cannot because it do not be

I would say the specific shortcoming being demonstrated here is the inability of LLMs to determine whether a piece of information is factual (not that they’re even dealing with “pieces of information” like that in the first place). They are also not able to tell whether a human questioner is being truthful, misleading, plainly lying, honestly mistaken, or nonsensical. Of course, which one of those is the case matters in a conversation that ought to have its basis in fact.

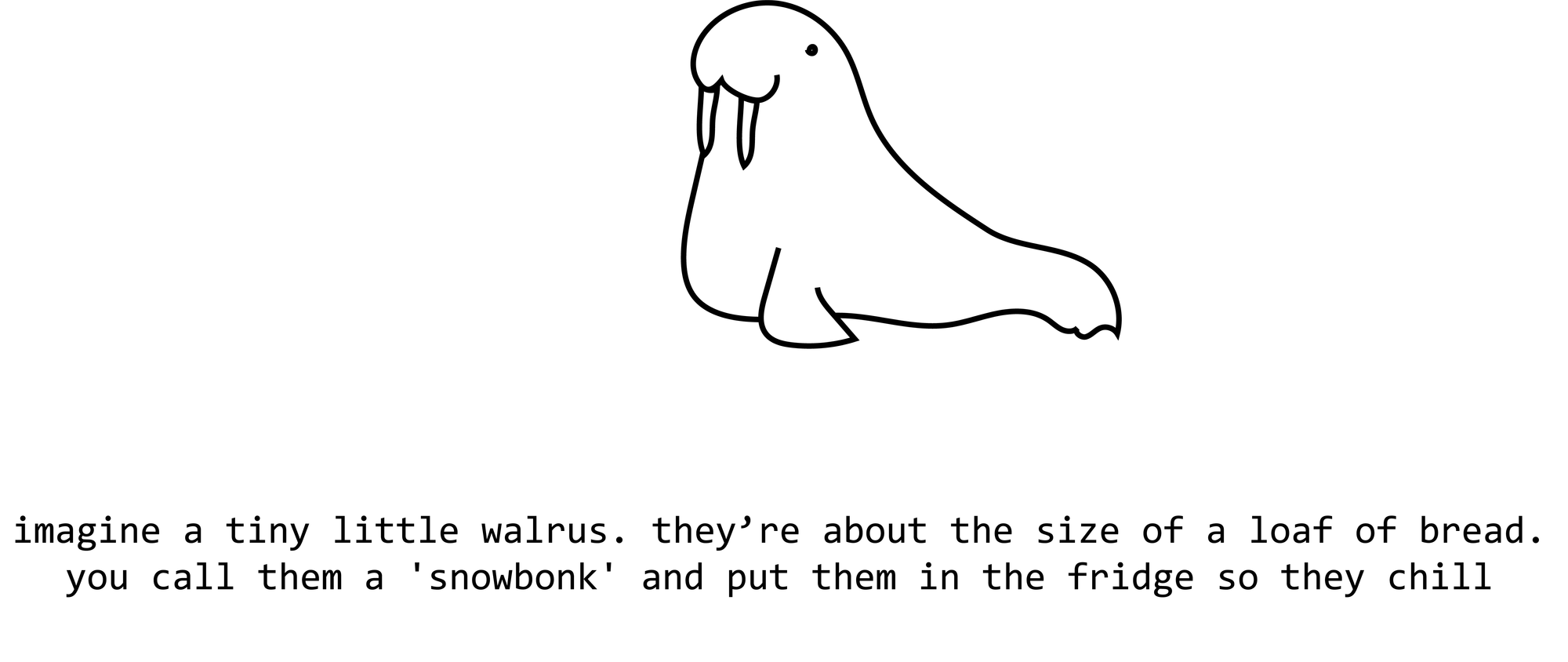

Damn, the snowbonk is kinda cool though. They’re a cutie.

So, just like real people, AI hates telling people “I don’t know.”